Most search-and-rescue operations are still carried out by trained people, but robots and other machinery have been gaining ground there as well. It still falls to humans to guide them, though. Now, however, some artificial intelligence is starting to seep in.

Not that this is entirely new: AI has been a major focus for roboticists, and for technology experts in general, for many years.

Still, autonomous machines and robots can only do so much. Fully automatic contraptions can handle only simple tasks, while "semi-autonomous" is usually a synonym for remote-controlled.

We're setting aside the few home robots that can do things like walk and respond with pre-recorded sentences. Those had to sacrifice certain things, like size and the ability to lift objects, in order to achieve those traits.

A new ability for search-and-rescue robots

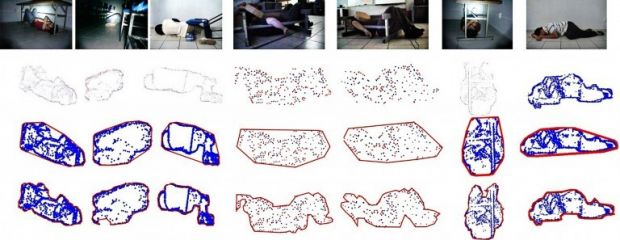

A team from Mexico's University of Guadalajara (UDG) has created an algorithm that can grant robots the ability to distinguish between people and rubble.

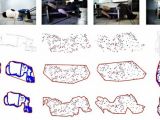

Using a combination of cameras, sensors, and a laser-plus-infrared system, the robot can build a 2D map of its surroundings and plot paths through the wreckage, all while taking photos of the area with its stereoscopic HD camera.
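The article doesn't say which path-planning method the UDG robot uses. As a rough illustration of what "plotting paths" on a 2D map involves, here is a minimal sketch assuming the laser scan has already been turned into an occupancy grid (0 = free, 1 = rubble). Plain breadth-first search is swapped in as a stand-in planner; the grid encoding, the `plan_path` name, and the example map are all hypothetical.

```python
from collections import deque
from typing import Dict, List, Optional, Tuple

Cell = Tuple[int, int]  # (row, column) in the hypothetical occupancy grid

def plan_path(grid: List[List[int]], start: Cell, goal: Cell) -> Optional[List[Cell]]:
    """Shortest 4-connected path from start to goal, or None if unreachable.

    grid[r][c] == 0 means free space, 1 means rubble (assumed encoding).
    Breadth-first search is a stand-in, not necessarily the UDG planner.
    """
    rows, cols = len(grid), len(grid[0])
    came_from: Dict[Cell, Optional[Cell]] = {start: None}
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            # Rebuild the route by following predecessors back to start.
            path: List[Cell] = []
            step: Optional[Cell] = cell
            while step is not None:
                path.append(step)
                step = came_from[step]
            return path[::-1]
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            inside = 0 <= nr < rows and 0 <= nc < cols
            if inside and grid[nr][nc] == 0 and (nr, nc) not in came_from:
                came_from[(nr, nc)] = cell
                queue.append((nr, nc))
    return None  # the goal is walled off by rubble

# Tiny example map: row 1 is mostly rubble with a single gap at column 2.
rubble_map = [
    [0, 0, 0, 0],
    [1, 1, 0, 1],
    [0, 0, 0, 0],
]
print(plan_path(rubble_map, (0, 0), (2, 0)))
# → [(0, 0), (0, 1), (0, 2), (1, 2), (2, 2), (2, 1), (2, 0)]
```

Because BFS explores cells in order of distance, the first time it reaches the goal it has found a shortest route; real robots typically use weighted planners such as A* that also account for terrain cost.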

The camera doesn't even need an LED flash, since the robot carries its own flashlight to light the scene. The algorithm then examines the photos to recognize any people in them.

The as-yet-unnamed robot is based on iRobot's FirstLook. It can't right itself if it tips onto its side, but thanks to its improved pathfinding, the odds of it getting stuck should be quite low.

The software can run on a laptop, but if the robot has capable enough processing hardware, it can take pictures, obtain 3D points to segment, and assign numerical values to the captured images, while using a filter to determine whether any shape in the photos is human. Pattern recognition is what it's all about.
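The article gives no details of the UDG team's segmentation or filter. To make the general idea concrete, here is a minimal sketch assuming the stereo camera yields 3D points in metres: nearby points are grouped with simple Euclidean clustering, then a crude size check flags clusters with a roughly person-sized extent. The function names, radius, and thresholds are hypothetical, and the size heuristic is a swapped-in stand-in; a real detector would rely on trained pattern recognition rather than fixed cut-offs.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float, float]  # (x, y, z) in metres; assumed stereo output

def euclidean_clusters(points: List[Point], radius: float = 0.15) -> List[List[Point]]:
    """Group points: any two points closer than `radius` end up in the same cluster."""
    unvisited = set(range(len(points)))
    clusters: List[List[Point]] = []
    while unvisited:
        seed = unvisited.pop()
        frontier, members = [seed], [seed]
        while frontier:
            i = frontier.pop()
            # Collect all still-unassigned points within `radius` of point i.
            near = [j for j in unvisited if math.dist(points[i], points[j]) < radius]
            for j in near:
                unvisited.remove(j)
                frontier.append(j)
                members.append(j)
        clusters.append([points[k] for k in members])
    return clusters

def looks_human_sized(cluster: List[Point], min_points: int = 20,
                      min_extent: float = 0.3, max_extent: float = 2.0) -> bool:
    """Crude, hypothetical filter: enough points and a person-sized largest dimension."""
    if len(cluster) < min_points:
        return False
    extents = [max(p[a] for p in cluster) - min(p[a] for p in cluster) for a in range(3)]
    return min_extent <= max(extents) <= max_extent

# Synthetic demo: a 1.7 m column of points and a small pebble 5 m away.
person = [(0.0, 0.0, i * 1.7 / 39) for i in range(40)]
pebble = [(5.0, 5.0, 0.01 * i) for i in range(5)]
clusters = euclidean_clusters(person + pebble)
print(sorted(looks_human_sized(c) for c in clusters))  # → [False, True]
```

Note that a person-sized chunk of rubble would pass this filter too, which is exactly why a learned classifier is needed on top of segmentation.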

Speaking of which, the shapes will be used to train a neural network to recognize those patterns more easily.
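The article doesn't describe the network the team will train. As the smallest possible illustration of learning from labelled shapes, here is a single-neuron perceptron trained on two hypothetical features per shape (height and width in metres) with made-up labels. The data, feature choice, and learning rate are assumptions; a real system would use a far larger network fed with actual image features.

```python
from typing import List, Sequence, Tuple

# Hypothetical training data: (height_m, width_m) of a detected shape,
# label 1 = human, 0 = rubble. These numbers are made up for illustration.
SAMPLES: List[Tuple[Tuple[float, float], int]] = [
    ((1.7, 0.5), 1), ((1.6, 0.7), 1), ((1.8, 0.6), 1),
    ((0.3, 0.5), 0), ((0.4, 0.7), 0), ((0.2, 0.6), 0),
]

def predict(weights: Sequence[float], bias: float, x: Sequence[float]) -> int:
    """Fire (return 1) if the weighted sum plus bias is positive."""
    return 1 if sum(w * xi for w, xi in zip(weights, x)) + bias > 0 else 0

def train_perceptron(samples, epochs: int = 100, lr: float = 0.1):
    """Classic perceptron learning rule: adjust weights only on mistakes."""
    weights = [0.0] * len(samples[0][0])
    bias = 0.0
    for _ in range(epochs):
        mistakes = 0
        for x, label in samples:
            error = label - predict(weights, bias, x)  # -1, 0 or +1
            if error:
                mistakes += 1
                weights = [w + lr * error * xi for w, xi in zip(weights, x)]
                bias += lr * error
        if mistakes == 0:  # every training shape is now classified correctly
            break
    return weights, bias

weights, bias = train_perceptron(SAMPLES)
print([predict(weights, bias, x) for x, _ in SAMPLES])  # → [1, 1, 1, 0, 0, 0]
```

Because these made-up samples are linearly separable, the perceptron convergence theorem guarantees the loop stops after a finite number of updates; real photos of people in rubble are nowhere near that tidy.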

Future plans for the robot

The UDG team intends to train the robot to classify human shapes automatically based on previous experience. That way, a whole swarm of Wall-Es could wheel through every nook and cranny of a disaster area and send back location data for everyone they find.
